It's 20 years since there was widespread panic that the Y2K bug might obliterate the world as we know it and plunge us all back into a dystopian, Cormac McCarthy-style bronze age.

And although it didn’t, there are some important lessons to take from it, tech journalist Peter Griffin says.

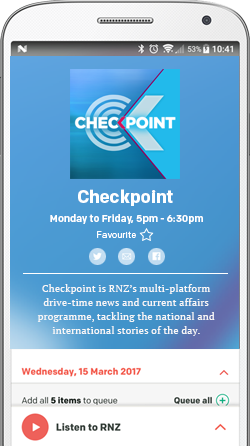

Photo: AFP / FILE

In short, the Y2K problem stemmed from the first computer mainframes, designed in the ‘60s and ‘70s. They helped run the big systems of banks, financial markets such as the stock exchange, payroll, power stations and electricity grids, airport scheduling and big global firms like IBM, NCR, Hewlett-Packard and General Motors – but not with the same degree of automation as today.

Back then, computers didn’t have much memory – something like 48 kilobytes, compared with the four or eight gigabytes in today’s phones, Griffin says. As a result, developers cut whatever they could when writing software to save space, he says.

“One of the things they did was instead of having four digits for the year ... they would just have 68 [instead of 1968]. And that would save them some memory, which was really expensive at the time.

“And people knew back then in the late '60s and early '70s, that it may be a problem, but it was decades away, and they expected all of these systems to be replaced in the late '70s, if not earlier.”

But as time went on, everyone was becoming more reliant on the computer systems and more complacent too, Griffin says.

“In the late '90s, you still had in banks with these green screen computers, some of them based on obsolete programming languages like Fortran, which had these two-digit placeholders for the date.

“And as we hurtle towards the millennium, that was going to become a problem, because we would roll over from 99 and instead of going 2000, we would go 00. Some of those computers would interpret that either as resetting to 1900, or they would just go haywire.

“That was the possibility, and it was a genuine fear that some people in the tech community had.”
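To make the rollover concrete, here is a minimal Python sketch. It is not code from any system Griffin describes: the function names, the sample years and the pivot value of 50 are illustrative assumptions. The first function always assumes the 1900s, as many legacy programs did; the second uses "windowing", one commonly used remediation alongside expanding date fields to four digits.

```python
def naive_year(two_digit: int) -> int:
    """Expand a stored two-digit year by always assuming the 1900s."""
    return 1900 + two_digit


def windowed_year(two_digit: int, pivot: int = 50) -> int:
    """Expand with a fixed window: values below the (assumed) pivot are 20xx."""
    century = 2000 if two_digit < pivot else 1900
    return century + two_digit


def years_elapsed(start_yy: int, end_yy: int, expand) -> int:
    """Years between two stored two-digit years, using the given expansion rule."""
    return expand(end_yy) - expand(start_yy)


# A record created in (19)68, checked just before and just after the rollover.
before = years_elapsed(68, 99, naive_year)    # 1999 - 1968
after = years_elapsed(68, 0, naive_year)      # 1900 - 1968, a negative age
fixed = years_elapsed(68, 0, windowed_year)   # 2000 - 1968

print(before, after, fixed)
```

With the naive rule, the elapsed time jumps from 31 years to minus 68 years at the rollover, which is the kind of result that could upset interest, billing or scheduling calculations. Windowing only postpones the ambiguity to a later pivot year, which is why widening the field to four digits was the more durable fix.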

The company then known as Telecom NZ claimed it spent $100m on its billing systems and network operations centre, and on making sure that every cell tower had the correct time, Griffin says.

Meanwhile, Microsoft – which had one of the most popular operating systems, Windows 95 – also had to spend a lot of money to ensure its system was compliant, but it had already been issuing regular updates quite efficiently, he says.

“But the layer underneath that, the Hewlett Packard and IBM and Mac machines that were common throughout offices in the ‘90s, the BIOS, the underlying operating system running the computer itself, all the hardware components - some of that stuff, if it dated from further back, would not have been compliant.”

In the United States, General Motors estimated in 1999 that it would have to spend $600m to bring its systems up to date across all its connected computers around the world, Griffin says.

He says that when he interviewed companies in London at the time, there was a sense that they were reluctantly spending a lot of money to upgrade because they’d been warned they’d otherwise be cut off as trusted vendors.

“It was a real problem for them, because everyone was trying to get developers at the time to go into the systems and rewrite the software to accommodate four digits. And as I said, some of these systems were really old and obscure programming languages.

“So they had to buy-in talent in many cases from Russia because they had lots of Fortran system still running. They understood the programming languages, and some people have said that this was really the start of the IT outsourcing boom.

“The theory is that this really precipitated that move to outsourcing, because from ‘96 to ‘99, hundreds of millions, if not billions of dollars, every year were flooding to these companies to fix the deficiencies in these systems.”

But when nothing really crashed on a global scale, it made people question if the tech sector had taken advantage of the situation, Griffin says.

“The tech sector in the US was lobbying politicians, lobbying the White House to say, ‘hey, we really need to mobilise business here to invest in getting their systems ready’. And that sounded like a very responsible message.

“But to what extent was that 'here's an opportunity to make money’?”

He says some people suggest the bursting of the dot-com bubble in early 2000 was a result of all the cash that had gone into the sector from big companies handing out contracts to maintain their systems.

What happened in New Zealand at the turn of the millennium?

Griffin says he was in Ireland when the clock struck midnight in New Zealand, watching TV with his friends.

“All mates were like, ‘wow, look at that fireworks going off in New Zealand’. But I was intently looking at Auckland City, if the lights were going to go off because obviously New Zealand was the test bed.

“We were the ones who would tell the rest of the world [if] something's gone wrong here.”

Griffin says New Zealand had set up a commission to deal with the upgrades to systems and, on 31 December, its representatives were in a bunker at the Beehive with a checklist of all the critical infrastructure.

“There were people in the banks in the stock exchange who stayed up all night in call centers, just sitting there, waiting for the calls to flood in that ‘the ATM network had gone down’ or whatever.

“There was arrangements with banks and financial organisations around the world, where we would call them and say ‘this is what we're seeing has gone wrong with our systems, you've got certain number of hours to fix this before you click over to midnight yourself’.

“And then there was just this silence and the phones didn't ring and none of the systems broke down.”

What could have happened?

Although some popular culture might have played into the fear of a doomsday situation and exaggerated the potential consequences, Griffin says it’s hard to unpick the counterfactual of what would have happened if governments hadn’t done anything.

“Like Russia and Italy just sort of went, it's too hard, and actually not a lot did happen in those countries, which would lead you to suggest that actually it may not have been so bad.

“But the US had a lot more complicated systems running a lot more complicated things. So I think it's been sort of lost as to what extent [that] would have led to a meltdown or whether it would just have been a big inconvenience.

“A lot of times when a clock is incorrect on a computer, it doesn't affect the running of the computer in any way. It's just those really time-sensitive things, and how many critical life-threatening things are actually time-based back then? Not so many. These days? A heck of a lot, and if there's any lesson out of it, it’s we need to understand the systems that we are building now, which are more complex than ever.”

However, some equipment at a nuclear power plant in Japan did fail and switch to a back-up system at the turn of the millennium. Griffin says it was one of those cases that made people wonder about, and fear, the possible repercussions of the increasing reliance on technology.

“We've got no idea how a lot of that stuff works, and I'm not sure if the companies that are creating this stuff can truly understand how that decision-making comes about.

“So we need to learn from what happened to the big banking companies, insurance companies, airlines in the ‘90s when they didn't quite understand their own systems and got themselves in real trouble.”

While the increased interconnectivity of tech has been beneficial, it’s also a double-edged sword, Griffin says. In the lead-up to Y2K, individual desktops that were not connected had to rely on CD-ROMs to upgrade their software, but these days we can do that at the push of a button with internet access.

“But the flip side of that is that stuff can spread very quickly and if there's a problem, every device that's connected to the network can be affected very quickly.

“As we move into critical systems that are life threatening … if something goes wrong, the potential for a lot of people to be affected is much greater.”

He says while the government is looking into how artificial intelligence is used and overseen, there’s a more laissez-faire approach globally.

“The next phase, I think, will be all of this great hardware and software we've created to interconnect us; do we know what it means if something goes wrong? And do we have good enough oversight of all of these systems?”