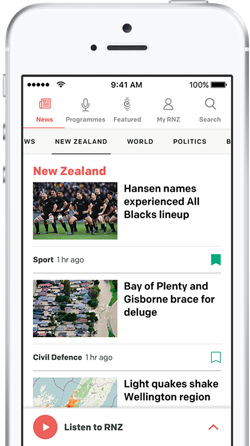

The new technology is part of Apple's iOS 17 upgrade. Photo: 123RF

A technology commentator predicts banks will start bringing in extra security measures ahead of new voice replication technology on iPhones.

From mid-September, a new accessibility tool will speak aloud anything users type, in a replica of their own voice, once they have recorded 15 to 25 minutes of randomised voice prompts.

The technology is part of the iOS 17 upgrade, and it is just one of a range of new functions to assist people with disabilities such as those who may be losing their voice.

An early version tested by a staff member at RNZ managed to trick a phone banking voice authentication system.

Speaking to Nine to Noon, Pratik Navani said there appeared to be no protection in place.

"I handed the phone to a colleague of mine yesterday and said can you try and press my locked phone, triple click the side button and see if it lets you access my voice and it did. If a phone is ideally sitting somewhere it would be very easy for someone to just within three clicks and a quick series of words get your phone to say anything in your voice, record it on another device and have that recording ready to go," Navani said.

Technology commentator Peter Griffin said an uptick in fraud or more responsibility being placed on customers would cause a shift in verification.

"That is when it's going to start to become a real issue for the industry and I think they'll be thinking about that, trying to anticipate it and tightening up the other layer of security that goes with voice verification to make sure that it doesn't become a big problem," Griffin said.

He said banks would soon introduce other ways to verify a user in conjunction with voice recognition.

"Like all artificial intelligence stuff is now appearing all over the place. It has inherently really great characteristics that we can exploit for our own productivity and cool new features like what Apple is doing , there is a flip side to that. It will be misused as well. That doesn't mean that things like voice cloning should be banned or the banks should necessarily drop it, it's just that there needs to be careful consideration," Griffin said.

Former US counter-intelligence officer Dennis Desmond agreed and said Apple and the banks needed to work more closely together to prevent accounts being hacked over the phone.

"In order to ensure that if this technology is used that there are safe guards in place, additional two-factor authentication and verification codes, the use of [an] external device rather than that specific phone in order to authenticate an individual and the measures that could also take," Desmond said.

He noted that although the voice replication remained only on the device, there were websites criminals used to bypass voice recognition security.

"Most criminals only need [to be] using Microsoft e technology which is probably in use in other countries, three seconds worth of audio recording in order to mimic somebody's voice. We've seen this type of technology used as you mentioned for kidnapping and ransom fraud where a voice comes over the phone saying 'I'm being held hostage, you need to pay this money to the kidnappers'."

Data privacy specialist Kent Newman said a user could mimic another person's voice, which could cause issues for banks, including scams.

"Any way that you could turn tech to capture that identity could be used to fool people into thinking they're dealing with someone that they aren't. So that would be a key issue for banking in particular but also just those relationships within families," Newman said.

Apple is taking precautions to ensure the voice data cannot be hacked: the information is stored on the device itself, rather than being uploaded to the cloud.