Artificial Intelligence is everywhere - but we can still make deliberate decisions about how we use it. Photo: 123rf

- The headline of this article was generated by AI

Editorial - Artificial Intelligence is everywhere, enmeshed in every aspect of our daily lives as the world hurtles towards the next technological revolution.

We still can, however, make deliberate decisions about how we use it, for what purpose and under what level of human oversight.

Many major news media companies are flirting with the idea of AI while some have gone all the way. Some are openly using it to create and curate content, including headlines and summaries. Others still have guardrails around the creation of original content but are using it for research, transcription, data analysis, personalisation and social media moderation.

The touted benefits include greater efficiency, freeing up journalists for other work such as investigations, offering more individualised content, reaching new audiences, analysing large amounts of data ... and the list goes on.

There are also plenty of risks on the flipside: bias, discrimination, loss of original thought, risk to journalistic quality, job losses and just getting things wrong.

Politicians are diving in - Prime Minister Christopher Luxon and Immigration Minister Erica Stanford use AI on TikTok to promote the 'Parent Boost' visa in Mandarin, with an upfront declaration. Photo: TikTok/christopherluxonmp

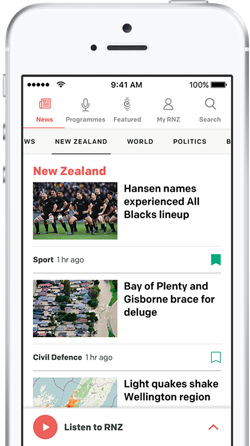

At RNZ our AI Principles are a starting point as we contemplate the future media landscape. We're taking a cautious approach in recognition of the risks to trust and reputation that come with adopting this technology.

In a nutshell, our principles allow AI to help us research stories, look for different people to interview, transcribe interviews and analyse large amounts of information - but not to create any content, including headlines, summaries or images.

Our accuracy standards still apply, so reporters using AI for research must treat AI-generated information as unverified material and go back to the original source. The process is human-AI-human, with checks all the way through.

We ask our teams to be very aware of security and privacy and not to upload any sensitive or confidential information into AI tools. RNZ receives a significant amount of material from external sources, and we make every effort to ensure AI-generated content, including visuals, does not appear on our platforms.

I generated the headline for this piece using ChatGPT. This is allowed under our rules when demonstrating the technology as part of a news story about AI, but it is not something we would do on a usual day in the newsroom.

We'd like to know how you feel about AI and its potential use at RNZ. Here's our Feedback page - we'd love to hear your thoughts.

The most recent research shows a high level of distrust towards AI in the media, and that distrust climbs even higher for news content, especially when no human is involved.

The BBC asked audiences in the United Kingdom, America and Australia in 2023 what they "think and want" in this space. One clear message was that using generative AI to create journalistic content was "felt to be very high risk". Concerns centred on the potential spread of misinformation, the deepening of "societal division", and the replacement of human "interpretation and insight".

Those surveyed wanted three actions from media organisations deploying this technology: demonstrate the value, put people first, and act transparently.

Importantly for RNZ, the research also indicated audiences were likely to hold public media to a "particularly high standard given their public-facing mission and access to public funding".

The latest Reuters Institute Digital News Report also probes audience comfort levels. It shows people are most receptive to AI driving personalised content, if that would "make news content quicker/easier to consume and more relevant". Research from Trusting News focuses on the relationship between disclosures on content and audience trust.

The 2025 Trust in News report from the Auckland University of Technology found about 60 percent of those surveyed were "uncomfortable" with the idea of news content created "mostly by AI with some human oversight", with only 8 percent saying they would be "comfortable".

That distrust eases somewhat for content "produced mainly by human journalists with AI assistance", but 35 percent were still uncomfortable, while 26 percent said they'd be okay with it.

Looking at the regulatory space, it really is the wild west out there. Media companies are busy setting up their own outposts, largely plotting their own course in lieu of any nationally consistent framework.

Overseas, news outlets are either signing content deals with AI companies or taking them to court on copyright grounds. One such battle royale is the New York Times versus OpenAI, the outcome of which will be a defining factor in how much control news companies will have over the use of their own original material, and who will reap the revenue rewards.

That's some of the big-picture action. Back on the newsroom floor, we can see significant benefits in using AI to help journalists level the playing field.

The number of reporters in New Zealand has dropped drastically over recent years while growing armies of communications staff control crucial information, trying to steer the narrative in favour of their company, government agency or minister. The result is less capability for in-depth, investigative reporting, fewer boots on the ground (especially in the regions), less scrutiny and so less accountability for those wielding the most power.

There are several ways AI could help make the job more efficient, with a relatively low level of risk. Transcription of interviews is already widely used within newsrooms. Using AI to search and analyse huge caches of information, such as a government budget, could help journalists find information politicians would rather keep buried among the hundreds of documents released each year.

Where it starts to get gnarly is when we ponder creating content with Generative AI.

This could be all or part of the text of a story, an image generated to match a particular story, the bullet points or summary we put at the top of a story, or the headline. How about using AI to translate RNZ content into languages other than English, then turn it into audio, to make it more accessible to communities here and to our friends and neighbours in the Pacific?

Doing any of this at present contravenes our AI Principles except in specific circumstances.

We could do it right now - but should we? And if we went down that path, what level of disclosure would you expect? Declarations on each piece of content, or general statements about what we are doing as a matter of consistent policy?

One move we have already made is using AI to help us better manage social media comments.

Jane Patterson, RNZ's director of editorial quality and training. Photo: RNZ / Jeff McEwan

RNZ has just launched Sence, an AI-powered tool that moderates comments in real time and identifies harmful content, alongside ongoing monitoring by our social media team. Comments won't be deleted, but they will be hidden until reviewed by a human. The aim is to create a more constructive space, reduce the number of times we have to turn comments off, and free up our team to concentrate on creating content.

Public sentiment remains the most powerful check on news media as we balance the benefits that could turbocharge journalism against the consequences that could destroy trust. We have to get it right.

Here's a look at the approaches of some companies that have public AI policies in place. All state the need for senior human oversight and adherence to legal and editorial standards.

Gen AI "should not be used to create news stories, current affairs or factual journalism for RNZ"; there are exceptions for stories about/demonstrating AI with declarations, and on a case-by-case basis with senior sign-off

- AI can be used for research, transcription, ideas for talent for stories, but content cannot be created, including written text

- RNZ's policy is not to use AI generated images or visuals, and it makes all efforts to avoid receiving AI content from its external partners

- Public disclosures on any content made with AI, along with any relevant context

Anything "generated or substantially generated" by Gen AI will be transparently labelled. Currently used for:

- Summarising emergency service media releases, live blogs and potentially publicly available documents

- Creating story 'Fast Facts'

- Potential use or under trial: headlines, social media curation, text to voice, story generation from data, story curation, automated page layout, translation into other languages

NZME will disclose if Gen AI has been used to "write an entire article, or to produce graphics or illustrations". It says it doesn't use "AI-generated photos or videos to accompany news content". Currently used for:

- Copy checking/editing

- Headlines, summaries, processing publicly available media releases

- Analysing large data sets for investigations

- NZ Stock Exchange announcements

- Some homepage curation

If significant elements generated by AI are included in a piece of work, TVNZ "will let you know".

- Its policy would allow text or images to be created "solely" using Gen AI, in which case TVNZ would "let you know". "Significant" elements will also be declared

- There will be human oversight and someone doing a final check

- If Gen AI is used for "creative" purposes, it would be made clear it was an "artist's impression"

Reuters uses AI at times in its "reporting, writing, editing, production and publishing", with disclosures when it relies "primarily or solely on Gen AI to produce news content".

The company has used its own newsrooms to test a suite of AI tools now developed for wider commercial use, including:

- To locate, edit and publish content

- Automated financial and economic alerts, including from government sources

- Technology for creating and sending news, and for filing flexibility

Its guidance gives examples where Gen AI could be acceptable, and where it would not.

Appropriate: Using AI tools, explicitly indicated, to demonstrate potential uses for the audience.

Inappropriate: Using them for the "wholesale creation of content" - including synthetic images, sounds or AI-generated text.

Appropriate: Cloning a dead person's voice to read a diary entry in a doco about their life, with the necessary permissions and disclosures.

Inappropriate: Using an AI tool to clone a real person's voice and likeness in news and information content, other than for demonstrative or illustrative use.

The BBC has extensive AI guidance. Its current uses include:

- Piloting Gen AI for sports reporting, to create "At a Glance" news summaries, and to format stories into BBC house style

- For targeted, personalised content

- To create a BBC synthetic voice for weather reports
